Rendering directly to a video file in Blender is not always practical. More often, the render produces a sequence of frames, which must then be assembled into the final video file, for example after additional post-processing.

We can compile a sequence of frames into a video in Blender itself, using the Video Sequence Editor (VSE). Alternatively, we can use a third-party tool such as FFmpeg.

First, we need to download FFmpeg.

We can download it from the official FFmpeg website: https://ffmpeg.org/download.html

Windows users can download prebuilt binaries from GitHub. This repository is linked from the official website: https://github.com/BtbN/FFmpeg-Builds/releases

FFmpeg does not require installation: just unzip the downloaded archive to a convenient location, for example, the “Program Files” directory.

Assembling a sequence of frames into a video

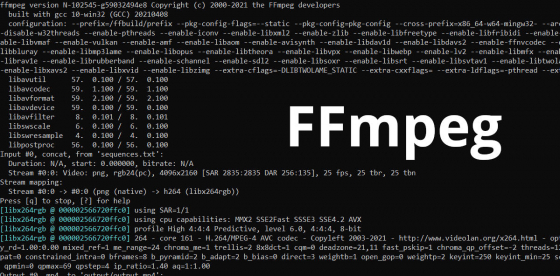

FFmpeg is used from the command line.

Press the “Win + R” key combination, type “cmd” (without quotes), and press “Enter”. A console window with a command line will open.

To call FFmpeg, we need to specify the full path to its executable file, which is located in the “bin” directory. For example, if we unpacked the archive to the “C:\Program Files\ffmpeg” directory:

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe"
```

Note that the quotes are required here because the path contains a space.

Next, we need to specify the parameters for FFmpeg.

First of all, we need to specify the location of the source frames:

```
-i frames/%%4d.png
```

We must specify the full or relative path to the directory where Blender saved the rendered animation frames.

Relative paths are resolved from the current directory of the command line. We can change the current directory with the “cd” command.

In this example, the relative path to the “frames” directory is specified.

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -i frames\%%4d.png
```

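Blender saves rendered image files with 4-digit frame numbers in the names: 0001.png, 0002.png, etc. Therefore, for FFmpeg to process all the frames, we specify the file-name mask “%%4d.png”. The doubled percent sign is how a literal “%” is written inside a batch (.bat) file; when typing the command directly at the prompt, use a single one: “%4d.png”.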
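Before encoding, it can be worth checking that no frame is missing from the rendered sequence, since a gap in the numbering may cut the video short. The following is a minimal Python sketch, assuming the 4-digit naming described above. The function name `missing_frames` and its parameters are hypothetical, introduced only for illustration. Note that in Python the zero-padded form must be written as `%04d`.

```python
def missing_frames(existing_names, first, last, mask="%04d.png"):
    """Return the frame numbers in [first, last] that have no matching file name.

    existing_names: iterable of file names found in the frames directory.
    mask: printf-style pattern; "%04d.png" expands to 0001.png, 0002.png, ...
    """
    names = set(existing_names)
    return [n for n in range(first, last + 1) if (mask % n) not in names]


# Typical use (assumes a "frames" directory and a 250-frame animation):
#   import os
#   print(missing_frames(os.listdir("frames"), 1, 250))
```

An empty list means that every frame in the range is present.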

Blender saves the first frame as 0001.png, while FFmpeg by default starts counting frames from 0. So, we specify the start frame explicitly in the parameters.

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -start_number 1 -i frames\%%4d.png
```

It is often necessary to compile several sequences of frames from different directories into one video. For example, the first directory could contain the color frames and the second the transparency mask frames.

To pass several directories with frames to FFmpeg, the paths to them must be written to a text file, each on its own line. For example, for two source directories with “color” and “alpha” frames, the file will look like this:

```
file 'color\%4d.png'
file 'alpha\%4d.png'
```

We can prepare such a file manually, or we can quickly create it from the command line by running the following two commands:

```
echo file 'color\%%4d.png' > sequences.txt
echo file 'alpha\%%4d.png' >> sequences.txt
```
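The same list file can also be generated from a script, which is convenient when there are more than a couple of directories. This is a minimal Python sketch, not the author's method. The function names `concat_list_text` and `write_concat_list` are hypothetical. Because this is Python and not a batch file, the percent sign is written once.

```python
from pathlib import Path, PureWindowsPath


def concat_list_text(directories, mask="%4d.png"):
    """Return the list-file text: one "file '...'" line per source directory."""
    # PureWindowsPath joins with a backslash on any OS, matching the article.
    lines = [f"file '{PureWindowsPath(d, mask)}'" for d in directories]
    return "\n".join(lines) + "\n"


def write_concat_list(list_path, directories, mask="%4d.png"):
    """Write the list file (for example sequences.txt) to disk."""
    Path(list_path).write_text(concat_list_text(directories, mask), encoding="utf-8")


# Typical use: write_concat_list("sequences.txt", ["color", "alpha"])
```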

After that, we can call FFmpeg, specifying the created file as the source:

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -f concat -i sequences.txt
```

FFmpeg may throw an “Unsafe file name” error. To fix it, add the parameter that allows unsafe file names:

```
-safe 0
```

All together:

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -f concat -safe 0 -i sequences.txt
```

We also need to specify the path of the output file where the finished video will be saved:

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -f concat -safe 0 -i sequences.txt output\output.mp4
```

In the FFmpeg parameters, we can specify the compression level:

```
-crf 10
```

The higher the value, the stronger the compression: the finished file will be smaller, and the quality will be worse.

If the final video requires “lossless” quality, we need to set the value to 0. The finished file in this case can be very large.

A value of about 17 is considered “visually lossless”.

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -f concat -safe 0 -i sequences.txt -crf 10 output\output.mp4
```

The encoding preset also affects the size of the finished video. It is also specified in the parameters and can take the following values:

```
ultrafast
superfast
veryfast
faster
fast
medium
slow
slower
veryslow
```

With the “ultrafast” preset, the file will be large, but the time spent on encoding it will be minimal. With the “veryslow” preset, the video will take longer to encode, but the file will be smaller.
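When the command is generated by a script, the CRF and preset choices described above can be checked before they reach the command line. This is a minimal Python sketch. The function name `quality_args` is hypothetical, the preset list is the one given above, and the 0 to 51 CRF range assumes the usual 8-bit x264 encoder.

```python
# Presets as listed in the article, from fastest to slowest.
PRESETS = ("ultrafast", "superfast", "veryfast", "faster", "fast",
           "medium", "slow", "slower", "veryslow")


def quality_args(crf=10, preset="veryslow"):
    """Return the FFmpeg arguments for the given CRF value and preset."""
    if not 0 <= crf <= 51:  # assumed range for 8-bit x264; 0 means lossless
        raise ValueError(f"crf must be between 0 and 51, got {crf}")
    if preset not in PRESETS:
        raise ValueError(f"unknown preset: {preset!r}")
    return ["-preset", preset, "-crf", str(crf)]
```

A misspelled preset is then reported before FFmpeg is started. On the command line, the two options are passed together: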

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -f concat -safe 0 -i sequences.txt -preset veryslow -crf 10 output\output.mp4
```

For compatibility with “QuickTime” players, we can set the following “format” parameter:

```
-vf format=yuv420p
```

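To use the H.264 encoder, we need to specify it in the parameters. The standard encoder name is “libx264”; the “libx264rgb” variant used here encodes RGB pixel formats only, so “libx264” is the one to choose when the output must really be stored as yuv420p.

```
-c:v libx264rgb
```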

To set the required frame rate, we need to add the “-r” parameter, specifying the desired number of frames per second:

```
-r 60
```

To overwrite an existing output file without being asked for confirmation, we can add the “-y” parameter:

```
-y
```

The final command:

```
"c:\Program Files\ffmpeg\bin\ffmpeg.exe" -y -f concat -safe 0 -r 60 -i sequences.txt -c:v libx264rgb -preset veryslow -crf 10 -vf format=yuv420p -r 60 output/output.mp4
```

By running this command from the command line, we will have FFmpeg assemble a video from the sequence of frames with the specified parameters.
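For repeated use, the same command can be launched from a script. This is a minimal Python sketch that mirrors the final command above option for option. The names `FFMPEG`, `build_command` and `assemble` are hypothetical, and the executable path is the example install location used in this article. Passing the arguments as a list means the path with a space needs no extra quoting.

```python
import subprocess
from pathlib import Path

# Assumed install location, taken from the example path in the article.
FFMPEG = r"c:\Program Files\ffmpeg\bin\ffmpeg.exe"


def build_command(list_file="sequences.txt", output="output/output.mp4",
                  fps=60, crf=10, preset="veryslow"):
    """Return the article's final FFmpeg command as an argument list."""
    return [
        FFMPEG,
        "-y",                       # overwrite the output without asking
        "-f", "concat", "-safe", "0",
        "-r", str(fps),             # frame rate applied to the input
        "-i", list_file,
        "-c:v", "libx264rgb",
        "-preset", preset,
        "-crf", str(crf),
        "-vf", "format=yuv420p",
        "-r", str(fps),             # frame rate of the output
        output,
    ]


def assemble(**options):
    """Create the output directory if needed, then run FFmpeg."""
    command = build_command(**options)
    Path(command[-1]).parent.mkdir(parents=True, exist_ok=True)
    subprocess.run(command, check=True)  # raises CalledProcessError on failure
```

Calling `assemble()` runs the command with the defaults, and `assemble(crf=0)` would request lossless compression. The output directory is created first because FFmpeg itself does not create missing directories.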

For my Patreon subscribers:

If there are already two directories with *.png frames in your project folder: “color” (frames in color) and “alpha” (frames with the alpha mask), just copy the downloaded file there and execute it. The finished video will be placed in the “output” directory.

Parameters set in this file (maximum quality): H.264, compression “lossless”, 60 fps, preset “veryslow”.

If necessary, you can edit this file in a text editor and set the required parameters.
